Understanding Nginx and solving cross-domain problems

Nginx is a lightweight web server and reverse proxy server. Due to its small memory footprint, extremely fast startup, and strong concurrency capability, it is widely used in Internet projects.

Forward proxy and reverse proxy

Forward proxy:

As shown in the figure, a firewall prevents us from accessing Google directly, so we bypass the firewall through a VPN. In this process, the client knows the destination its request needs to reach, but the request is relayed by the VPN, so the server does not know where the request actually came from. In other words, a forward proxy acts on behalf of the client.

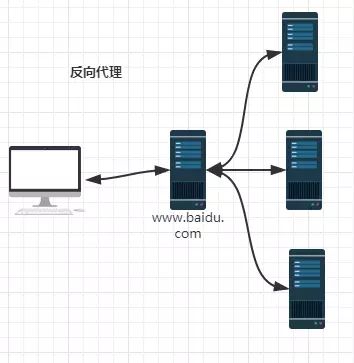

Reverse proxy:

A reverse proxy acts on behalf of the server. The client does not know where its request ends up or which server handles it. It simply sends the request to the reverse proxy server, which forwards the request to another server on the intranet for handling. The reverse proxy process is transparent to the client.
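
As a rough illustration of the reverse proxy idea, the fragment below forwards every request to a server on the internal network. It is a minimal sketch meant to sit inside an http block; the name example.com and the address 10.0.0.5:8080 are hypothetical placeholders, not values from this article.

```nginx
# Minimal reverse proxy sketch (fragment; place inside http { }).
server {
    listen       80;
    server_name  example.com;                # hypothetical public name

    location / {
        # Forward the request to a hypothetical intranet server.
        proxy_pass http://10.0.0.5:8080;

        # Pass the original host and client address to the backend,
        # since the backend otherwise only sees the proxy.
        proxy_set_header Host            $host;
        proxy_set_header X-Real-IP       $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

The client only ever talks to example.com; which intranet machine answers is decided entirely by the proxy configuration.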

Nginx modules and working principles

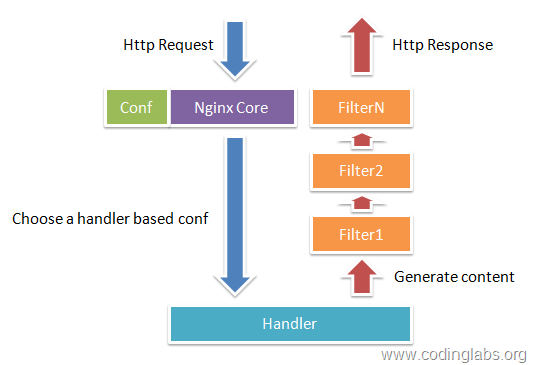

Nginx consists of a core and a set of modules. The core is deliberately simple: it maps each client request to a location block by looking up the configuration file, and the directives configured in that location invoke the modules that do the actual work.

Nginx modules are structurally divided into core modules, basic modules, and third-party modules.

Core modules: HTTP module, EVENT module, MAIL module

Basic modules: HTTP Access module, HTTP FastCGI module, HTTP Proxy module and HTTP Rewrite module

Third-party modules: HTTP Upstream Request Hash module, Notice module, and HTTP Access Key module.

Nginx modules are functionally divided into the following three categories.

Handlers (processor modules). This type of module processes the request directly and performs operations such as outputting content and modifying header information. Generally only one handler processes a given request.

Filters (filter modules). This type of module modifies the content produced by the handler modules before Nginx finally outputs it.

Proxies (proxy modules). This type includes modules such as Nginx's HTTP Upstream. These modules interact with backend services such as FastCGI and implement functions such as service proxying and load balancing.

So in fact, nginx itself only maps the request to different locations according to the configuration file, and then returns the processing result.
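
The three functional categories can be seen side by side in a single configuration. The fragment below is only a sketch: the paths, ports, and location prefixes are hypothetical, and the comments name the standard module that each directive belongs to.

```nginx
# Sketch: which kind of module each directive activates (fragment of nginx.conf).
http {
    gzip on;    # filter: the gzip filter module compresses response bodies

    server {
        listen 80;

        location /static/ {
            root /var/www;                      # handler: static files served from disk
        }

        location /api/ {
            proxy_pass http://127.0.0.1:9000;   # proxy: HTTP proxy module
        }

        location ~ \.php$ {
            include       fastcgi_params;
            fastcgi_pass  127.0.0.1:9001;       # proxy: FastCGI module
        }
    }
}
```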

Nginx process model

After nginx starts, there is one master process and multiple worker processes. The master process mainly manages the workers: it receives signals from the outside world, sends signals to each worker process, monitors the workers' running status, and automatically starts a new worker process when one exits abnormally. Basic network events are handled in the worker processes. The workers are peers: they compete equally for client requests, and each one is independent of the others. A request is processed in exactly one worker process, and a worker cannot handle requests that belong to another worker. The number of worker processes is configurable. It is generally set to match the number of CPU cores on the machine, a choice that follows from nginx's process model and event-handling model.
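
A minimal sketch of how the worker count is set in nginx.conf. The value 1024 for worker_connections is just a common example value, not something this article prescribes.

```nginx
# Main (global) context: one worker process per CPU core.
worker_processes  auto;          # "auto" detects the core count; a fixed number such as 4 also works

events {
    worker_connections  1024;    # example value: max simultaneous connections per worker
}
```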

But if there are multiple workers, every worker process can listen for the same incoming request. How is one process chosen to handle it?

For this situation, nginx provides a mutex (the accept_mutex). Only the worker that obtains the lock can accept the new connection.
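
The lock described above corresponds to the accept_mutex directive in the events block. The sketch below enables it explicitly; in recent nginx versions it is off by default, so treat the values here as an illustration rather than a recommendation.

```nginx
events {
    accept_mutex        on;      # workers take turns accepting new connections
    accept_mutex_delay  500ms;   # how long a worker waits before retrying for the lock
    worker_connections  1024;    # example value
}
```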

Hot deployment principle

After nginx.conf is modified and the configuration is reloaded (for example with nginx -s reload), the master process starts new worker processes that use the new configuration, and all new requests are handed to these new workers. The old worker processes finish the requests they were already handling and are then shut down, so the service is never interrupted.

Configuration file

1 | main |

The nginx configuration file is divided into six main parts: global configuration (main), nginx working mode (events), http settings (http), host settings (server), URL matching (location), and load-balancing server settings (upstream).

We often use http, server, and location.
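
To show how the six parts nest, here is a skeleton nginx.conf. It is a sketch only: the upstream name backend, the address, and the numeric values are hypothetical placeholders.

```nginx
# main: global settings
worker_processes  1;
error_log  logs/error.log;

# events: working mode and connection limits
events {
    worker_connections  1024;
}

# http: settings shared by all virtual hosts
http {
    # upstream: a named group of backend servers
    upstream backend {
        server 127.0.0.1:8080;
    }

    # server: one virtual host
    server {
        listen 80;

        # location: URL matching inside the virtual host
        location / {
            proxy_pass http://backend;
        }
    }
}
```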

HTTP module

1 | http{ |

include: pulls in another configuration file. Here it loads mime.types from the configuration directory, which maps file extensions to MIME types so that nginx can recognize file types.

default_type: sets the default MIME type, here a binary stream.

log_format: sets the log format and which variables are recorded.

access_log: sets the file in which every access is logged. The trailing main is the name of the log format and corresponds to log_format main.

sendfile: enables an efficient file transfer mode. Setting the tcp_nopush and tcp_nodelay directives to on helps prevent network congestion.

keepalive_timeout: sets how long a client connection is kept alive. After this time, the server closes the connection.
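
The original listing is truncated above, so the fragment below is a stand-in rather than the author's configuration. It follows the layout of the stock nginx.conf and uses each directive just described; the log path and the timeout of 65 seconds are example values.

```nginx
http {
    include       mime.types;                  # file extension -> MIME type table
    default_type  application/octet-stream;    # fallback type: binary stream

    # Named log format "main", referenced by access_log below.
    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';
    access_log  logs/access.log  main;

    sendfile     on;
    tcp_nopush   on;
    tcp_nodelay  on;

    keepalive_timeout  65;                     # seconds
}
```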

Server module

1 | server { |

The server keyword marks the start of a virtual host definition.

listen: specifies the port the virtual host listens on.

server_name: specifies one or more IP addresses or domain names, separated by spaces.

root: sets the web root directory for the whole server virtual host. Note that it is distinct from a root defined inside a location {} block.

index: defines the default home page for the whole virtual host. Note that it is distinct from an index defined inside a location {} block.

charset: sets the default character encoding for web pages.

access_log: specifies where the access log for this virtual host is stored. The trailing main specifies the output format of the access log.
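
Since the original server listing is truncated, the fragment below is only a sketch of how these directives fit together. The names, paths, and charset are hypothetical, and it assumes a log format called main has been defined with log_format in the enclosing http block.

```nginx
server {
    listen       80;
    server_name  localhost example.com;        # hypothetical names, space separated
    root         /var/www/html;                # hypothetical web root for the whole host
    index        index.html index.htm;
    charset      utf-8;
    access_log   logs/host.access.log  main;   # "main" must exist as a log_format
}
```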

Location module

1 | location / { |

location / matches requests for the site root. Because it is a prefix match, it also handles any URI that no more specific location matches.

The root directive specifies the web directory used when this location is accessed. It can be a relative path (relative to the nginx installation directory) or an absolute path.

index sets the default home page served when only the domain name is entered. The files are tried in order: index.php index.html index.htm. If directory listing is not enabled and none of these default pages can be found, a 403 error is returned.
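
A small sketch of such a location block, wrapped in a server block so the fragment is valid inside http. The relative path html is the one used by the stock configuration; the autoindex line is commented out and shown only to indicate where directory listing would be enabled.

```nginx
server {
    listen 80;

    location / {
        root   html;                              # relative to the nginx installation directory
        index  index.php index.html index.htm;    # tried in this order
        # autoindex on;                           # directory listing; without it a missing index yields 403
    }
}
```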

Upstream module

The upstream module is the load-balancing module. It uses simple scheduling algorithms to distribute client requests across the backend servers.

1 | upstream iyangyi.com{ |

The ip_hash directive inside the block selects one of the load-balancing scheduling algorithms, which are introduced below. It is followed by the list of backend servers, each declared with the server keyword followed by its address.
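
The original upstream listing is truncated, so the fragment below is a sketch rather than the author's configuration. It reuses the upstream name iyangyi.com from the article; the server addresses are hypothetical.

```nginx
upstream iyangyi.com {
    ip_hash;                          # same client IP -> same backend
    server 192.168.12.1:80;           # hypothetical backend addresses
    server 192.168.12.2:80 down;      # "down" marks a server as temporarily out of rotation
    server 192.168.12.3:8080;
}

server {
    listen 80;

    location / {
        proxy_pass http://iyangyi.com;    # refers to the upstream group by name
    }
}
```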

Four scheduling algorithms are available for Nginx load balancing:

- Weighted round robin (default). Requests are assigned to the backend servers one by one in chronological order. If a backend server goes down, it is automatically removed from rotation, so user access is unaffected. The weight parameter specifies the polling weight: the higher the weight, the larger the share of requests a server receives. It is mainly used when the backend servers differ in performance.

- ip_hash. Each request is assigned according to the hash of the client IP, so visitors from the same IP always reach the same backend server. This effectively solves the session-sharing problem of dynamic web pages.

- fair. A more intelligent algorithm than the two above. It balances load according to page size and load time, that is, it allocates requests according to the response time of the backend servers and gives priority to the servers that respond fastest. Nginx itself does not support fair. To use this algorithm you must install the third-party upstream_fair module.

- url_hash. Requests are assigned according to the hash of the requested URL, so each URL is always directed to the same backend server, which can further improve the efficiency of backend cache servers. Older versions of Nginx did not support url_hash and required a separate hash module. Newer versions provide a built-in hash directive in the upstream block that can hash on the request URI.
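
The built-in algorithms can be sketched as follows. The upstream names and addresses are hypothetical, and the hash directive assumes an nginx version that ships it in the upstream module. The fair algorithm is left out because it depends on a third-party module.

```nginx
# Weighted round robin: the first server receives roughly three of every four requests.
upstream backend_weighted {
    server 192.168.12.1:80 weight=3;
    server 192.168.12.2:80 weight=1 max_fails=2 fail_timeout=30s;   # skipped for 30s after 2 failures
}

# URL hashing: the same request URI always maps to the same backend.
upstream backend_by_url {
    hash $request_uri consistent;     # "consistent" limits remapping when servers are added or removed
    server 192.168.12.1:80;
    server 192.168.12.2:80;
}
```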

Nginx solves cross-domain problems

The debugging page is: http://192.168.1.2:8080/

The requested interface is: http://sun668.vip/api/get/info

Step 1:

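Change the requested interface in the front-end code to: http://192.168.1.2/api/get/info

PS: Sending the request to Nginx instead of directly to sun668.vip is what solves the cross-domain problem, provided the page is loaded from the same origin (scheme, host, and port) as the proxied interface.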

Step 2:

Modify the nginx.conf file:

1 | server{ |
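
The original listing is truncated above, so the following is a sketch under stated assumptions, not the author's configuration. It assumes Nginx runs on 192.168.1.2 and listens on port 80, that the debugging page is opened through Nginx at http://192.168.1.2/ so that the page and the interface share one origin, and that every path under /api/ should be forwarded to sun668.vip.

```nginx
server {
    listen       80;
    server_name  192.168.1.2;

    # Assumption: the debugging page is reached through Nginx as well,
    # so it shares an origin with the proxied interface.
    location / {
        proxy_pass http://192.168.1.2:8080;
    }

    # Requests such as /api/get/info are forwarded to the real interface host.
    # With no URI part in proxy_pass, the original request path is kept.
    location /api/ {
        proxy_pass http://sun668.vip;
    }
}
```

With this in place the browser only ever talks to 192.168.1.2, and Nginx makes the request to sun668.vip on the server side, where the same-origin policy does not apply.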

Reference documents:

https://www.zybuluo.com/phper/note/89391

https://blog.csdn.net/mine_song/article/details/56678736

https://blog.csdn.net/hguisu/article/details/8930668