Blob, Multipart Upload

What is a Blob?

A blob (Binary Large Object) is a large object of binary data. In database management systems, a BLOB is a collection of binary data stored as a single entity. A blob is typically an image, audio, video, or other multimedia file. **In JavaScript, a Blob object represents a file-like object of immutable, raw data.**

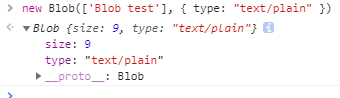

As you can see, the `myBlob` object has two properties: `size` and `type`. The `size` property is the size of the data in bytes, and `type` is a MIME type string. A Blob does not necessarily hold data in a JavaScript-native format. For example, the `File` interface is based on `Blob`: it inherits Blob's functionality and extends it to support files on the user's system.
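Here is a minimal sketch of the two properties. It assumes a runtime with a global `Blob` (any modern browser, or Node.js 18+). The string and MIME type are illustrative and are not the values from the screenshot above:

```javascript
// Create a small Blob from a string; strings are stored as UTF-8 bytes.
const myBlob = new Blob(["<h1>Hello</h1>"], { type: "text/html" });

// size: number of bytes of data; type: the MIME type string.
console.log(myBlob.size); // 14
console.log(myBlob.type); // text/html

// A Blob created without a type reports an empty string.
const untyped = new Blob(["abc"]);
console.log(JSON.stringify(untyped.type)); // ""
```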

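Blob API

A Blob consists of an optional string `type` (usually a MIME type) and `blobParts`.

MIME (Multipurpose Internet Mail Extensions) types are standard identifiers for the format of a piece of data, such as `text/html` for an HTML document.

Common MIME types include `text/html`, `text/plain`, `image/png`, `image/jpeg`, `application/json`, and `application/octet-stream` (arbitrary binary data).

In JavaScript, a Blob object is created with the constructor `new Blob(blobParts, options)`. Its parameters are described as follows: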

blobParts: An array of ArrayBuffer, ArrayBufferView, Blob, or DOMString (string) values. Strings are encoded as UTF-8.

options: An optional object with the following two properties:

- type: Defaults to `""`. It is the MIME type of the content that will be put into the blob.

- endings: Defaults to `"transparent"`. It specifies how line terminators (`\n`) in string parts are written. It takes one of two values. `"native"` converts line terminators to the newline convention of the host operating system. `"transparent"` keeps the terminators stored in the blob unchanged.
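The sketch below exercises both parameters, again assuming a global `Blob`. It mixes a string, a typed array, and another Blob in `blobParts`. It also shows that strings are stored as UTF-8 bytes (the euro sign takes three bytes):

```javascript
// blobParts may mix strings, typed arrays and other Blobs.
const parts = [
  "€5",                     // string: UTF-8 encoded, "€" is 3 bytes + "5" is 1
  new Uint8Array([0x21]),   // ArrayBufferView: one raw byte (0x21 is "!")
  new Blob([" ok"]),        // an existing Blob is copied in as-is (3 bytes)
];

const blob = new Blob(parts, { type: "text/plain", endings: "transparent" });

console.log(blob.size); // 8  (4 + 1 + 3)
console.log(blob.type); // text/plain

// Read it back to confirm the parts were concatenated in order.
blob.text().then((text) => console.log(text)); // €5! ok
```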

Properties

As mentioned above, a Blob object has two properties:

- size (read-only): The size, in bytes, of the data contained in the Blob.

- type (read-only): A string giving the MIME type of the data contained in the Blob. If the type is unknown, the value is an empty string.

Methods

- slice([start[, end[, contentType]]]): Returns a new Blob containing the data in the specified byte range of the source Blob.

- stream(): Returns a ReadableStream that reads the contents of the Blob.

- text(): Returns a Promise that resolves with the entire contents of the Blob as a USVString, decoded as UTF-8.

- arrayBuffer(): Returns a Promise that resolves with the entire contents of the Blob as binary data in an ArrayBuffer.
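Here is a short sketch of the four methods. It assumes a global `Blob` (browser or Node.js 18+). `countBytes` and `demo` are hypothetical helper names used only for illustration:

```javascript
const blob = new Blob(["hello world"], { type: "text/plain" });

// slice(start, end, contentType): bytes [0, 5) as a new Blob; the source is untouched.
const head = blob.slice(0, 5, "text/plain");

// stream(): read the Blob chunk by chunk through a ReadableStream reader.
async function countBytes(b) {
  const reader = b.stream().getReader();
  let total = 0;
  for (;;) {
    const { done, value } = await reader.read(); // value is a Uint8Array
    if (done) return total;
    total += value.byteLength;
  }
}

async function demo() {
  const text = await head.text();          // text(): UTF-8 decoded string
  const buffer = await blob.arrayBuffer(); // arrayBuffer(): raw bytes
  const streamed = await countBytes(blob);
  return { text, byteLength: buffer.byteLength, streamed };
}

demo().then((result) => console.log(result));
// { text: 'hello', byteLength: 11, streamed: 11 }
```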

Note that **Blob objects are immutable**. We cannot change the data in a Blob directly. We can, however, slice it into parts, create new Blob objects from those parts, and combine them into a new Blob. This is similar to JavaScript strings: we cannot change a character in a string, but we can create a new, corrected string.
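The following is a small illustration of that immutability, under the same assumption of a global `Blob`. The original Blob is never modified. A new Blob is built from a slice of it plus new data:

```javascript
const original = new Blob(["Hello, world"]);

// We cannot edit `original` in place, but we can cut out a slice
// and combine it with new data into a brand-new Blob.
const patched = new Blob([original.slice(0, 7), "Blob"]);

Promise.all([original.text(), patched.text()]).then(([before, after]) => {
  console.log(before); // Hello, world  (unchanged)
  console.log(after);  // Hello, Blob
});
```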

Large File Multipart Upload (Vue)

Client side

Uploading slices

First, implement the upload function. Uploading requires two steps:

- Slicing the file

- Sending the slices to the server

The File object used here inherits from Blob, so it can be cut into pieces with Blob's slice method.


When the upload button is clicked, `createFileChunk` is called to slice the file. The slice size is fixed at 10 MB here, so the number of slices depends on the file size. For example, a 100 MB file is split into 10 slices.

Inside `createFileChunk`, a while loop calls `slice` repeatedly and pushes each slice into the `fileChunkList` array.

When generating the slices, each one needs an identifier, used as its hash. For now, `filename-index` is used. This lets the backend tell which slice it has received and later merge the slices in order.

Then `uploadChunks` is called to upload all the slices. Each slice, its hash, and the file name are put into a FormData object. The `request` function defined earlier is called for each one and returns a Promise. Finally, `Promise.all` uploads all the slices concurrently.
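The client-side steps can be sketched as plain functions, outside any Vue component. This is a minimal sketch, not the author's original component code. `labelChunks` is a hypothetical name for the hashing step, and the server URL `http://localhost:3000` is an assumption. `request` stands in for the promise-returning `request` function mentioned above and is passed in as a parameter.

```javascript
// Slice size from the text: 10 MB.
const SIZE = 10 * 1024 * 1024;

// Cut a File/Blob into consecutive slices with Blob.prototype.slice.
function createFileChunk(file, size = SIZE) {
  const fileChunkList = [];
  let cur = 0;
  while (cur < file.size) {
    fileChunkList.push({ file: file.slice(cur, cur + size) });
    cur += size;
  }
  return fileChunkList;
}

// Give every slice a "filename-index" hash so the server knows its position.
function labelChunks(fileChunkList, filename) {
  return fileChunkList.map(({ file }, index) => ({
    chunk: file,
    hash: `${filename}-${index}`,
  }));
}

// Upload all slices concurrently. `request` is a hypothetical helper that
// sends { url, data } and returns a Promise (standing in for the `request`
// function mentioned in the text).
function uploadChunks(data, filename, request) {
  const requestList = data.map(({ chunk, hash }) => {
    const formData = new FormData();
    formData.append("chunk", chunk);
    formData.append("hash", hash);
    formData.append("filename", filename);
    return request({ url: "http://localhost:3000", data: formData });
  });
  return Promise.all(requestList);
}
```

Inside a Vue upload handler, this would be wired up roughly as `uploadChunks(labelChunks(createFileChunk(file), file.name), file.name, request)`. Injecting `request` keeps the slicing logic independent of the transport.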

Sending the merge request

This uses the second way of merging slices mentioned in the overall design: the front end explicitly tells the server to merge. The front end therefore sends one extra request, and the server merges the slices when it receives that request.
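Here is a minimal sketch of the merge request. It uses the Fetch API instead of the article's `request` helper. The endpoint `http://localhost:3000/merge` is an assumption. The body also carries `size`, the slice size, which the server needs in order to place each slice at the right offset, as explained in the merging section.

```javascript
// Hypothetical merge endpoint; the "/merge" path is an assumption.
const MERGE_URL = "http://localhost:3000/merge";

// Tell the server that every slice has been uploaded and may be merged.
// `send` defaults to the global fetch and can be swapped out (e.g. in tests).
async function mergeRequest(filename, size, send = fetch) {
  const response = await send(MERGE_URL, {
    method: "POST",
    headers: { "content-type": "application/json" },
    body: JSON.stringify({ filename, size }),
  });
  if (!response.ok) {
    throw new Error(`merge failed: HTTP ${response.status}`);
  }
  return response;
}
```

It would be called once `uploadChunks` has resolved, for example `await mergeRequest(file.name, SIZE)`.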


Server side

The server is built simply with Node's `http` module.
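Here is a minimal sketch of that server, assuming Node.js. The CORS headers and the `OPTIONS` handling are assumptions for the common setup where the Vue dev server runs on a different origin. `createUploadServer` is a hypothetical name, and the slice and merge routes still have to be added.

```javascript
const http = require("http");

// Build the upload server; the slice and merge routes are added later.
function createUploadServer() {
  const server = http.createServer();
  server.on("request", (req, res) => {
    // The Vue dev server runs on another origin, so allow cross-origin calls.
    res.setHeader("Access-Control-Allow-Origin", "*");
    res.setHeader("Access-Control-Allow-Headers", "*");
    // Answer CORS preflight requests without further processing.
    if (req.method === "OPTIONS") {
      res.statusCode = 200;
      res.end();
      return;
    }
    // Placeholder until the upload and merge routes exist.
    res.statusCode = 404;
    res.end("not found");
  });
  return server;
}
```

Start it with `createUploadServer().listen(3000)`. Port 3000 matches the URL assumed on the client.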


Receiving slices

The `multiparty` package is used to parse the FormData sent by the front end.

In the callback passed to multiparty's `form.parse`, the `files` parameter holds the file fields of the FormData, and the `fields` parameter holds its non-file fields.


In the chunk object produced by multiparty, `path` is the location of the temporary file and `size` is its size. The multiparty documentation mentions that `fs.rename` can be used to move the temporary file, that is, to move the file slice. I use fs-extra, whose `rename` method had permission problems on Windows, so I replaced it with `fse.move`.

When receiving file slices, first create a folder to store them. The front end sends a unique hash with each slice, so that hash is used as the file name when the slice is moved from the temporary path into the slice folder.
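Here is a sketch of the receiving step, assuming Node.js and the `multiparty` npm package. `UPLOAD_DIR`, `saveChunk`, and `handleChunkUpload` are hypothetical names. The sketch uses the built-in `fs.promises.rename` rather than fs-extra's `move`. Note that `rename` fails with `EXDEV` when the temporary directory is on a different file system, a case fs-extra's `move` handles by copying. Stripping directory parts with `path.basename` is an added precaution, since the names come from the client.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical folder (relative to the working directory) for slice folders.
const UPLOAD_DIR = path.resolve("target");

// Move one slice from its temp path to <uploadDir>/<filename>/<hash>.
async function saveChunk(tempPath, filename, hash, uploadDir = UPLOAD_DIR) {
  // basename() drops any directory parts a client might put in the names.
  const chunkDir = path.join(uploadDir, path.basename(filename));
  await fs.promises.mkdir(chunkDir, { recursive: true });
  await fs.promises.rename(tempPath, path.join(chunkDir, path.basename(hash)));
}

// Request handler for slice uploads ("multiparty" must be installed from npm).
function handleChunkUpload(req, res) {
  const multiparty = require("multiparty");
  const form = new multiparty.Form();
  form.parse(req, async (err, fields, files) => {
    if (err) {
      res.statusCode = 400;
      res.end("invalid form data");
      return;
    }
    // Every entry is an array because a field name may repeat.
    const [chunk] = files.chunk; // temp file info: { path, size, ... }
    const [hash] = fields.hash;
    const [filename] = fields.filename;
    try {
      await saveChunk(chunk.path, filename, hash);
      res.end("received file chunk");
    } catch (e) {
      res.statusCode = 500;
      res.end("failed to store chunk");
    }
  });
}
```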

Merging slices

After receiving the merge request from the front end, the server merges all the slices in the folder.


The front end sends the file name with the merge request, so the server can use it to find the slice folder created in the previous step.

Then `fs.createWriteStream` creates a writable stream. Its file name is the slice folder name combined with the file extension.

Next, the server iterates over the slice folder, creates a readable stream for each slice with `fs.createReadStream`, and pipes it into the target file.

Note that each readable stream is written to a specific position in the target file. That position is set by the `start` option in the second argument of `createWriteStream`. This lets multiple readable streams be merged into the file concurrently: even if the streams finish in a different order, each one lands in the right place. For this reason, the front end must also send a `size` parameter (the slice size) with the request.
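Here is a sketch of the merge step using only Node.js built-ins (`fs`, `path`, `stream/promises`). `mergeFileChunk`, `chunkIndex`, and `readJsonBody` are hypothetical names. The slice names are assumed to follow the `filename-index` scheme from the client. One detail matters for concurrent merging. `createWriteStream` opens files with the `"w"` flag by default, which truncates the file. So here the target is created once, and each slice stream opens it with `"r+"` and its own `start` offset.

```javascript
const fs = require("fs");
const path = require("path");
const { pipeline } = require("stream/promises");

// Slices are named "<filename>-<index>"; take the number after the last "-".
function chunkIndex(name) {
  return Number(name.slice(name.lastIndexOf("-") + 1));
}

// Collect and parse the JSON body of the merge request ({ filename, size }).
function readJsonBody(req) {
  return new Promise((resolve, reject) => {
    const parts = [];
    req.on("data", (data) => parts.push(data));
    req.on("end", () => {
      try {
        resolve(JSON.parse(Buffer.concat(parts).toString("utf8")));
      } catch (e) {
        reject(e);
      }
    });
    req.on("error", reject);
  });
}

// Merge every slice in chunkDir into filePath. Slice i is written at byte
// offset i * size, so all slices can be piped concurrently.
async function mergeFileChunk(chunkDir, filePath, size) {
  const names = await fs.promises.readdir(chunkDir);
  // Create (or truncate) the target once; "r+" below modifies it in place.
  await fs.promises.writeFile(filePath, "");
  await Promise.all(
    names.map(async (name) => {
      const chunkPath = path.join(chunkDir, name);
      await pipeline(
        fs.createReadStream(chunkPath),
        fs.createWriteStream(filePath, { flags: "r+", start: chunkIndex(name) * size })
      );
      await fs.promises.unlink(chunkPath); // remove the slice once copied
    })
  );
  await fs.promises.rmdir(chunkDir); // the slice folder is now empty
}
```

In the merge route, `const { filename, size } = await readJsonBody(req)` provides the values needed to locate the slice folder and call `mergeFileChunk`.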


Other usage scenarios

We can download data from the internet and store it in a Blob object, for example:
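Here is a minimal browser sketch using `XMLHttpRequest` with `responseType = "blob"`, so that the browser hands back the response body as a Blob. The check for status 200 is an added assumption.

```javascript
// Download `url` and pass the response body to `callback` as a Blob.
const downloadBlob = (url, callback) => {
  const xhr = new XMLHttpRequest();
  xhr.open("GET", url);
  xhr.responseType = "blob"; // ask for a Blob instead of text
  xhr.onload = () => {
    if (xhr.status === 200) {
      callback(xhr.response); // xhr.response is a Blob here
    }
  };
  xhr.send(null);
};
```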


Besides the `XMLHttpRequest` API, we can also use the `fetch` API to obtain binary data as a stream. Here is how to use `fetch` to download an image from the network and display it locally:
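Here is a minimal browser sketch of that flow. `showImage` is a hypothetical helper, and the image URL (for example `flowers.jpg`) is a placeholder.

```javascript
// Fetch an image, read the response body as a Blob, and display it
// through an object URL.
async function showImage(img, url) {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} while fetching ${url}`);
  }
  const blob = await response.blob();          // read the body as a Blob
  const objectURL = URL.createObjectURL(blob); // in browsers: blob:<origin>/<uuid>
  img.src = objectURL;                         // the <img> now shows the Blob
  return objectURL;
}
```

In the browser: `showImage(document.querySelector("img"), "flowers.jpg")`.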


When the fetch request succeeds, we call the response object's `blob()` method to read a Blob from the response. We then create an object URL from it with `URL.createObjectURL()` and assign that URL to the `src` attribute of the `img` element to display the image.

Blob as a URL

A Blob can easily be used as the URL of an `<a>`, `<img>`, or other tag. Thanks to the `type` property, we can also upload and download Blob objects, and `type` becomes the `Content-Type` of network requests that send the Blob. Below is an example of downloading a Blob as a file, but first we briefly introduce Blob URLs.

1. Blob URL/Object URL

A Blob URL (or object URL) is a pseudo-protocol that lets Blob and File objects be used as URL sources for images, links for downloading binary data, and so on. In the browser, a Blob URL is created with `URL.createObjectURL`, which takes a Blob and creates a unique URL for it of the form `blob:<origin>/<uuid>`. For example:

```
blob:https://example.org/40a5fb5a-d56d-4a33-b4e2-0acf6a8e5f64
```

The browser keeps an internal URL → Blob mapping for every URL generated by `URL.createObjectURL`. Such URLs are therefore short, yet still give access to the Blob. A generated URL is valid only while the current document is open. It can be used to reference the Blob in `<img>` or `<a>`, but if you access a Blob URL that no longer exists, the browser returns a 404 error.

Blob URLs seem convenient, but they have a side effect. Because the URL → Blob mapping is stored, the Blob itself stays in memory and the browser cannot release it. The mapping is cleared automatically when the document is unloaded, and only then is the Blob released.

If the application is long-lived, however, that will not happen soon. So once we create a Blob URL, the Blob stays in memory even when it is no longer needed.

To solve this problem, call `URL.revokeObjectURL(url)` to remove the reference from the internal mapping. This allows the Blob to be garbage-collected (if nothing else references it) and frees the memory. Next, let's look at a concrete example of downloading a Blob as a file.

2. Blob file download example

index.html


index.js
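Here is a sketch of index.js. It assumes that index.html loads this script and contains a button with the hypothetical id `downloadBtn`. `download` and `bindDownloadButton` are illustrative names. Deferring `URL.revokeObjectURL` with `setTimeout` is a cautious choice rather than a requirement.

```javascript
// Save `blob` under `fileName` by clicking a temporary <a download> link.
const download = (fileName, blob) => {
  const url = URL.createObjectURL(blob);
  const link = document.createElement("a");
  link.href = url;
  link.download = fileName; // suggested name for the saved file
  // Attaching the link first is a common precaution for older browsers.
  document.body.appendChild(link);
  link.click();
  link.remove();
  // Drop the URL → Blob mapping so the Blob can be freed; deferred a tick
  // so the browser has already picked up the URL for the download.
  setTimeout(() => URL.revokeObjectURL(url), 0);
};

// Hypothetical wiring: build a text/plain Blob and download it on click.
function bindDownloadButton(button) {
  button.addEventListener("click", () => {
    const myBlob = new Blob(["Hello, Blob!"], { type: "text/plain" });
    download("blob.txt", myBlob);
  });
}
```

In the browser, call `bindDownloadButton(document.querySelector("#downloadBtn"))` once the page has loaded.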


In this example, we create a Blob of type `"text/plain"` with the Blob constructor. We then download it as a file by dynamically creating an `<a>` element.

More uses

For more uses, see the article "你不知道的 Blob" ("The Blob You Don't Know").
